Entropy Based Regularization Improves Performance in the Forward-Forward Algorithm

Published in ESANN, 2023

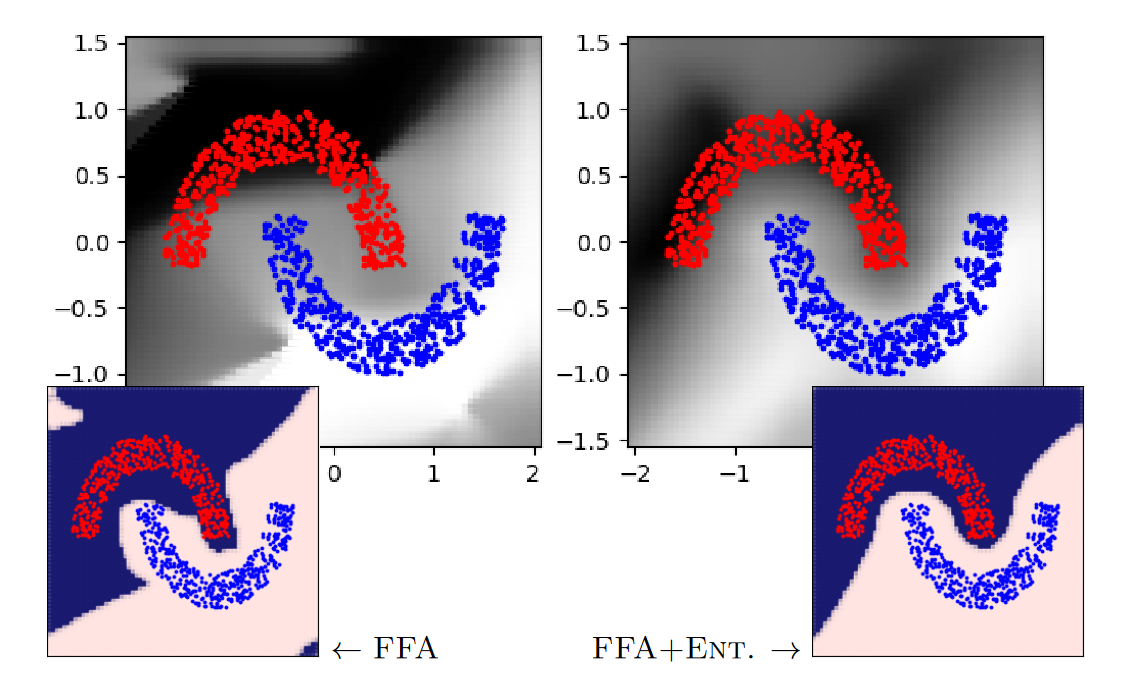

The forward-forward algorithm (FFA) is a recently proposed alternative to end-to-end backpropagation in deep neural networks. FFA builds networks greedily, layer by layer, which makes it of particular interest in applications where memory and computational constraints are important. To boost the layers’ ability to transfer useful information to subsequent layers, in this paper we propose a novel regularization term for the layer-wise loss function that is based on Rényi’s quadratic entropy. Preliminary experiments show that accuracy is generally significantly improved across all network architectures. In particular, with the proposed regularization, smaller architectures become more effective at our classification tasks than they are with the original FFA.
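The abstract does not spell out the exact regularizer, so the following is only a rough illustration under stated assumptions, not the authors' method. It combines two ingredients: the goodness-based layer loss from Hinton's original FFA description (goodness is the sum of squared activations, compared against a threshold `theta` through a logistic function), and a standard Parzen-window estimate of Rényi's quadratic entropy, H2 = -log of the integral of p(x)^2 dx, computed from a batch of activations with a Gaussian kernel of width `sigma`. The function names, the choice to regularize only the positive-sample activations, the sign of the term (rewarding higher entropy), and the parameters `lam` and `sigma` are all hypothetical; the paper's formulation may differ.

```python
import numpy as np


def renyi_quadratic_entropy(h, sigma=1.0):
    """Parzen-window estimate of Renyi's quadratic entropy, H2 = -log(integral of p(x)^2 dx).

    h: array of shape (N, d), one activation vector per sample in the batch.
    With a Gaussian kernel of width sigma, the integral reduces to the mean of a
    pairwise Gaussian kernel with variance 2 * sigma**2 over all sample pairs.
    """
    _, d = h.shape
    diffs = h[:, None, :] - h[None, :, :]       # (N, N, d) pairwise differences
    sq_dists = np.sum(diffs ** 2, axis=-1)      # (N, N) squared distances
    # Log of the kernel normalizer (4*pi*sigma^2)^(-d/2), kept in log space for large d
    log_norm = -0.5 * d * np.log(4.0 * np.pi * sigma ** 2)
    # Mean of exp(-||x_i - x_j||^2 / (4*sigma^2)); diagonal terms equal 1, so it never underflows to 0
    mean_kernel = np.mean(np.exp(-sq_dists / (4.0 * sigma ** 2)))
    return -(log_norm + np.log(mean_kernel))


def softplus(x):
    # Numerically stable log(1 + exp(x))
    return np.logaddexp(0.0, x)


def ff_layer_loss(h_pos, h_neg, theta, lam=0.1, sigma=1.0):
    """Hypothetical entropy-regularized forward-forward loss for a single layer.

    h_pos, h_neg: (N, d) layer activations for positive and negative samples.
    """
    g_pos = np.sum(h_pos ** 2, axis=1)  # goodness of positive samples
    g_neg = np.sum(h_neg ** 2, axis=1)  # goodness of negative samples
    # -log sigmoid(g_pos - theta) pushes positive goodness above theta;
    # -log(1 - sigmoid(g_neg - theta)) pushes negative goodness below theta.
    ff_term = softplus(-(g_pos - theta)).mean() + softplus(g_neg - theta).mean()
    # Assumed regularizer: subtracting lam * H2 rewards more spread-out positive activations
    reg_term = -lam * renyi_quadratic_entropy(h_pos, sigma)
    return ff_term + reg_term


# Usage on random ReLU-like activations (batch of 32, layer width 16)
rng = np.random.default_rng(0)
h_pos = np.maximum(rng.normal(size=(32, 16)), 0.0)
h_neg = np.maximum(rng.normal(size=(32, 16)), 0.0)
print(ff_layer_loss(h_pos, h_neg, theta=16.0))
```

This sketch only evaluates the loss value; to actually train a layer, the same expressions would need to be written in an automatic-differentiation framework so gradients can flow into that layer's weights. The pairwise kernel costs O(N^2 d) per batch, which is one reason an estimator like this is usually computed on mini-batches.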

Recommended citation: M. Pardi, D. Tortorella, A. Micheli (2023). "Entropy Based Regularization Improves Performance in the Forward-Forward Algorithm." Proceedings of the 31st European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2023), pp. 393-398.
