Continual Learning with Graph Reservoirs: Preliminary experiments in graph classification

Published in ESANN, 2024

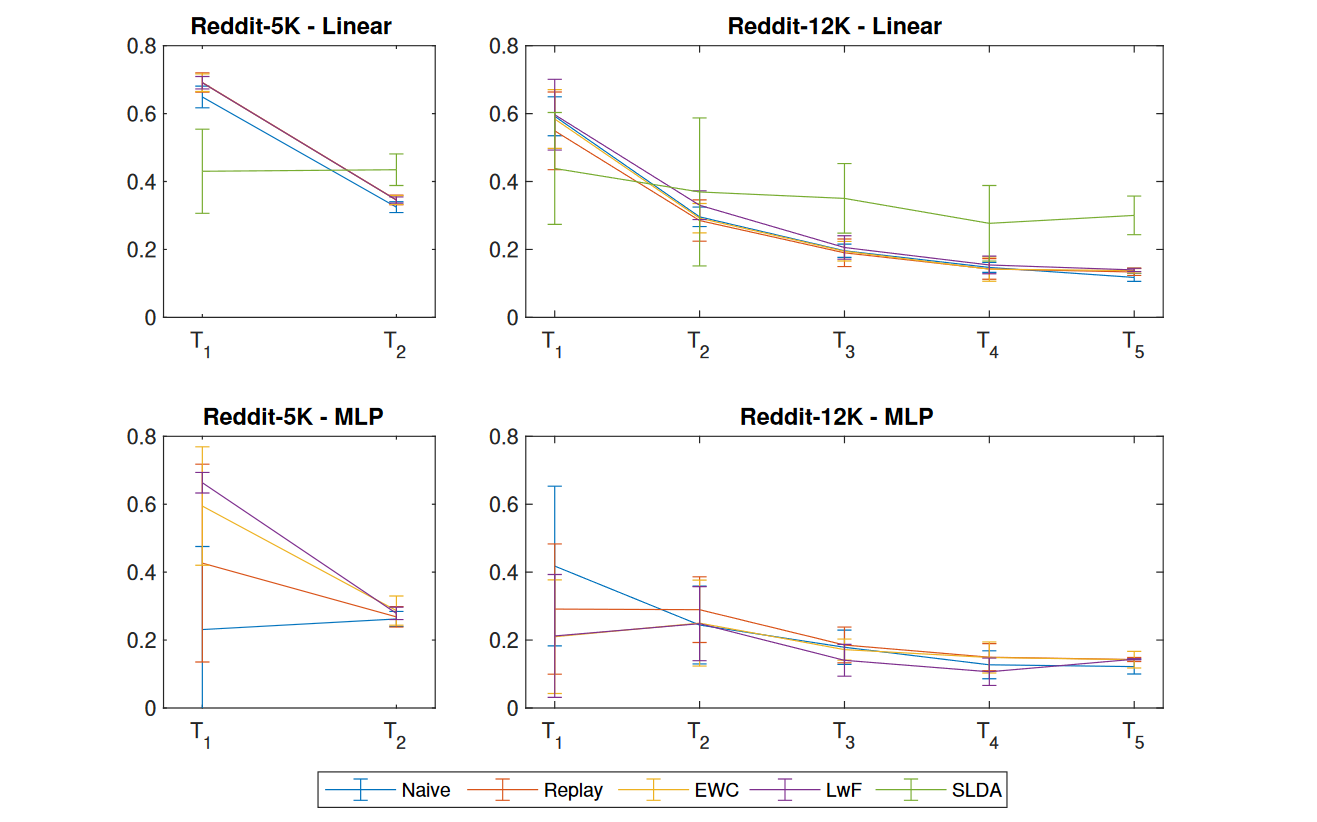

Continual learning aims to address the challenge of catastrophic forgetting in training models where data patterns are non-stationary. Previous research has shown that fully-trained graph learning models are particularly affected by this issue. One approach to lifting part of the burden is to leverage the representations provided by a training-free reservoir computing model. In this work, we evaluate for the first time different continual learning strategies in conjunction with Graph Echo State Networks, which have already demonstrated their efficacy and efficiency in graph classification tasks.

Recommended citation: D. Tortorella, A. Micheli (2024). "Continual Learning with Graph Reservoirs: Preliminary experiments in graph classification." Proceedings of the 32nd European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2024), pp. 35-40.
