Bridging XAI and spectral analysis to investigate the inductive biases of deep graph networks

Published in Machine Learning, 2025

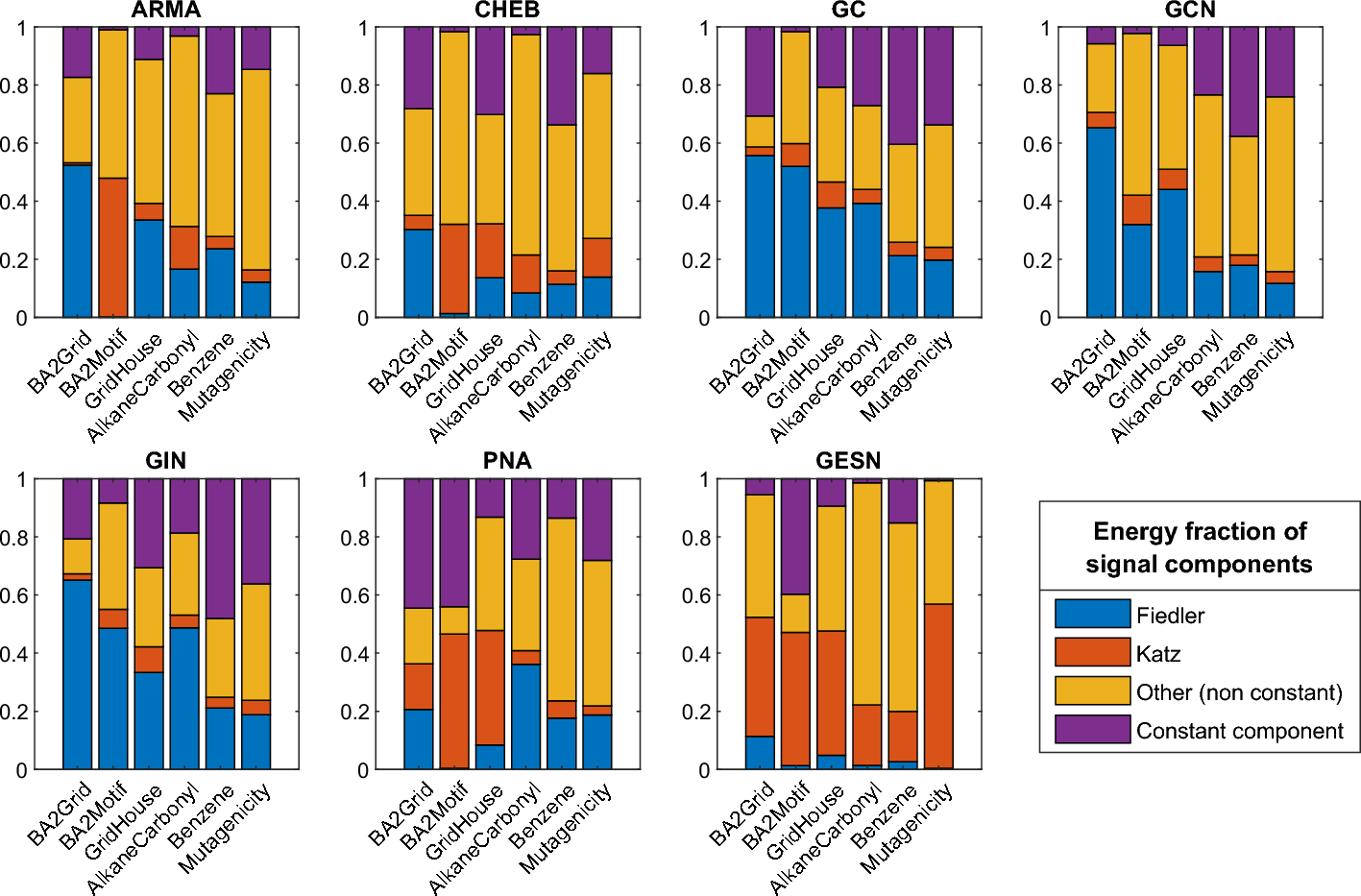

Understanding the inductive bias of Deep Graph Networks (DGNs) is crucial because it reveals how these models generalize from training data to unseen data. Discovering these learning assumptions, and their alignment with the task’s characteristics, enables informed architectural design choices and facilitates interpretation. With this goal, we analyze the different inductive biases of DGNs by relating the node-level explanations produced by explainable AI (XAI) methods to known network science measures of lower-order (local) and higher-order (increasingly global) connectivity. We then apply graph signal processing to refine this analysis at the granularity of the graph frequency spectrum spanned by the explanation signals. Our main finding is that different DGNs focus on different regions of the graph frequency spectrum: high-frequency DGNs generalize by focusing on lower-order graph connectivity, while low-frequency DGNs generalize by recognizing higher-order graph structures. This characterization is first derived on synthetic benchmarks by showing that explanations align with network science measures sitting at the two extremes of the spectrum (Katz centrality in the high frequencies, and Fiedler eigenvector scores in the low frequencies). Moving to real-world chemical benchmarks, we generalize this result by showing that inductive biases lie on a continuum that corresponds to sub-regions of the frequency spectrum.
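To make the notion of a "graph frequency spectrum" of a node-level signal concrete, the following is a minimal sketch and not the authors' pipeline. It assumes the combinatorial Laplacian L = D - A as the graph operator (the paper may use a different one), and the toy path graph, the `alternating` signal, and the helper names `spectral_energy` and `mean_frequency` are all hypothetical, introduced only for illustration. A node signal, such as an explanation score per node, is projected onto the Laplacian eigenvectors, and the share of its energy at each eigenvalue indicates whether it is smooth (low frequency) or oscillating (high frequency).

```python
import numpy as np


def spectral_energy(adjacency, signal):
    """Return Laplacian eigenvalues and the normalized energy of `signal` at each one."""
    degree = np.diag(adjacency.sum(axis=1))
    laplacian = degree - adjacency                 # assumed operator: L = D - A
    eigvals, eigvecs = np.linalg.eigh(laplacian)   # eigenvalues in ascending order
    coeffs = eigvecs.T @ signal                    # graph Fourier coefficients
    energy = coeffs ** 2
    return eigvals, energy / energy.sum()


def mean_frequency(adjacency, signal):
    """Energy-weighted average eigenvalue (the Rayleigh quotient of the signal)."""
    eigvals, energy = spectral_energy(adjacency, signal)
    return float((eigvals * energy).sum())


# Hypothetical toy graph: an undirected path on 6 nodes.
n = 6
A = np.zeros((n, n))
for i in range(n - 1):
    A[i, i + 1] = A[i + 1, i] = 1.0

# Fiedler vector: eigenvector of the second-smallest Laplacian eigenvalue.
lap_eigvals, lap_eigvecs = np.linalg.eigh(np.diag(A.sum(axis=1)) - A)
fiedler = lap_eigvecs[:, 1]

# A maximally oscillating signal: the sign flips across every edge.
alternating = np.array([1.0, -1.0, 1.0, -1.0, 1.0, -1.0])

_, fiedler_energy = spectral_energy(A, fiedler)
low = mean_frequency(A, fiedler)
high = mean_frequency(A, alternating)
print(f"mean frequency, Fiedler signal:     {low:.3f}")
print(f"mean frequency, alternating signal: {high:.3f}")
```

In this toy setting the Fiedler signal places all of its energy on the second-smallest eigenvalue, while the alternating signal has a much larger mean frequency. Comparing an explanation signal against reference signals in this way is one possible reading of the spectral analysis described above, under the stated assumptions.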

Recommended citation: M. Fontanesi, A. Micheli, M. Podda, D. Tortorella (2025). "Bridging XAI and spectral analysis to investigate the inductive biases of deep graph networks." Machine Learning, vol. 114, 257.
