Sitemap

A list of all the posts and pages found on the site. For you robots out there, an XML version is also available for digesting.

Pages

Posts

Future Blog Post

Published:

This post will show up by default. To disable scheduling of future posts, edit _config.yml and set future: false.

Blog Post number 4

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 3

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 2

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 1

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

publications

Dynamic Graph Echo State Networks

Published in ESANN, 2021

Preliminary experiments on temporal graph classification with DynGESN, a novel reservoir computing model for dynamic graphs.

Recommended citation: D. Tortorella, A. Micheli (2021). "Dynamic Graph Echo State Networks." Proceedings of the 29th European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2021), pp. 99-104.

Read Paper

Discrete-Time Dynamic Graph Echo State Networks

Published in Neurocomputing, 2022

DynGESN is introduced as a novel reservoir computing model for temporal graphs. It is more efficient than temporal graph kernels and 100x faster than temporal GNNs.

Recommended citation: A. Micheli, D. Tortorella (2022). "Discrete-Time Dynamic Graph Echo State Networks." Neurocomputing, vol. 496, pp. 85-95.

Read Paper

Spectral Bounds for Graph Echo State Network Stability

Published in IJCNN, 2022

More accurate stability bounds for GESN based on graph spectral properties.

Recommended citation: D. Tortorella, C. Gallicchio, A. Micheli (2022). "Spectral Bounds for Graph Echo State Network Stability." Proceedings of the 2022 International Joint Conference on Neural Networks.

Read Paper

Hierarchical Dynamics in Deep Echo State Networks

Published in ICANN, 2022

An in-depth theoretical analysis of asymptotic dynamics in Deep ESNs with different contractivity hierarchies.

Recommended citation: D. Tortorella, C. Gallicchio, A. Micheli (2022). "Hierarchical Dynamics in Deep Echo State Networks." Proceedings of the 31st International Conference on Artificial Neural Networks (ICANN 2022), pp. 668-679.

Read Paper

Beyond Homophily with Graph Echo State Networks

Published in ESANN, 2022

Preliminary experiments on heterophilic node classification with GESN, showing the effectiveness of going beyond stability constraints.

Recommended citation: D. Tortorella, A. Micheli (2022). "Beyond Homophily with Graph Echo State Networks." Proceedings of the 30th European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2022), pp. 491-496.

Read Paper

Leave Graphs Alone: Addressing Over-Squashing without Rewiring

Published in LoG, 2022

GESN achieves significantly better accuracy on six heterophilic node classification tasks by tuning Lipschitz constants instead of resorting to graph rewiring.

Recommended citation: D. Tortorella, A. Micheli (2022). "Leave Graphs Alone: Addressing Over-Squashing without Rewiring" (Extended Abstract). Presented at the First Learning on Graphs Conference (LoG 2022), Virtual Event, December 9–12, 2022.

Read Paper

Addressing Heterophily in Node Classification with Graph Echo State Networks

Published in Neurocomputing, 2023

Node-level GESN is highly effective for heterophilic node classification tasks, while also being efficient and resilient to over-smoothing.

Recommended citation: A. Micheli, D. Tortorella (2023). "Addressing Heterophily in Node Classification with Graph Echo State Networks." Neurocomputing, vol. 550, 126506.

Read Paper

Minimum Spanning Set Selection in Graph Kernels

Published in GbRPR, 2023

Minimizing the number of support vectors in SVM without any loss of accuracy via an RRQR factorization of the kernel matrix.

Recommended citation: D. Tortorella, A. Micheli (2023). "Minimum Spanning Set Selection in Graph Kernels." Graph-Based Representations in Pattern Recognition. GbRPR 2023. LNCS vol. 14121, pp. 15-24.

Read Paper

Richness of Node Embeddings in Graph Echo State Networks

Published in ESANN, 2023

Preliminary analysis of GESN’s node embedding richness via entropy and numerical analysis metrics.

Recommended citation: D. Tortorella, A. Micheli (2023). "Richness of Node Embeddings in Graph Echo State Networks." Proceedings of the 31st European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2023), pp. 11-16.

Read Paper

Entropy Based Regularization Improves Performance in the Forward-Forward Algorithm

Published in ESANN, 2023

Adding a representation entropy term into the loss of Hinton’s FFA improves accuracy.

Recommended citation: M. Pardi, D. Tortorella, A. Micheli (2023). "Entropy Based Regularization Improves Performance in the Forward-Forward Algorithm." Proceedings of the 31st European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2023), pp. 393-398.

Read Paper

Designs of Graph Echo State Networks for Node Classification

Published in Neurocomputing, 2024

Analysis of dense and sparse reservoir designs for node-level GESN via topology-dependent and topology-agnostic richness measures for node embeddings.

Recommended citation: A. Micheli, D. Tortorella (2024). "Designs of Graph Echo State Networks for Node Classification." Neurocomputing, vol. 597, 127965.

Read Paper

Continuously Deep Recurrent Neural Networks

Published in ECML PKDD, 2024

A continuous-depth ESN is proposed, where a smooth depth hyperparameter regulates the extent of local connections.

Recommended citation: A. Ceni, P. F. Dominey, C. Gallicchio, A. Micheli, L. Pedrelli, D. Tortorella (2024). "Continuously Deep Recurrent Neural Networks." Machine Learning and Knowledge Discovery in Databases: Research Track. ECML PKDD 2024. LNCS vol. 14947, pp. 59-73.

Read Paper

Onion Echo State Networks: A Preliminary Analysis of Dynamics

Published in ICANN, 2024

A preliminary analysis of the dynamical properties of Onion ESN, a novel reservoir with groups of units presenting an annular spectrum.

Recommended citation: D. Tortorella, A. Micheli (2024). "Onion Echo State Networks: A Preliminary Analysis of Dynamics." Proceedings of the 33rd International Conference on Artificial Neural Networks (ICANN 2024), LNCS vol. 15025, pp. 117-128.

Read Paper

Continual Learning with Graph Reservoirs: Preliminary experiments in graph classification

Published in ESANN, 2024

GESN relieves part of catastrophic forgetting in the continual learning setting by avoiding training representations for graph classification.

Recommended citation: D. Tortorella, A. Micheli (2024). "Continual Learning with Graph Reservoirs: Preliminary experiments in graph classification." Proceedings of the 32nd European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2024), pp. 35-40.

Read Paper

Analyzing Explanations of Deep Graph Networks through Node Centrality and Connectivity

Published in Discovery Science, 2024

We analyze the alignment of DGN explanations with node centrality and graph connectivity, highlighting the presence of different inductive biases.

Recommended citation: M. Fontanesi, A. Micheli, M. Podda, D. Tortorella (2025). "Analyzing Explanations of Deep Graph Networks through Node Centrality and Connectivity." Proceedings of the 27th International Conference on Discovery Science (DS 2024), LNCS vol. 15243, pp. 295-309.

Read Paper

Encoding Graph Topology with Randomized Ising Models

Published in ESANN, 2025

We propose RIM, a new model for encoding graph topology that is amenable to physical implementation on non-conventional hardware.

Recommended citation: D. Tortorella, A. Brau, A. Micheli (2025). "Encoding Graph Topology with Randomized Ising Models." Proceedings of the 33rd European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2025), pp. 271-276.

Read Paper

Robustness in Protein-Protein Interaction Networks: A Link Prediction Approach

Published in ESANN, 2025

Reframing the prediction of dynamical properties of biochemical pathways as a link prediction task over the protein-protein interaction networks (PPINs).

Recommended citation: A. Dipalma, D. Tortorella, A. Micheli (2025). "Robustness in Protein-Protein Interaction Networks: A Link Prediction Approach." Proceedings of the 33rd European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2025), pp. 277-282.

Read Paper

An Empirical Evaluation of Rewiring Approaches in Graph Neural Networks

Published in Pattern Recognition Letters, 2025

We propose an evaluation framework for graph rewiring methods based on training-free GNNs. Under our fair conditions, rewiring rarely improves message-passing on 12 node and graph classification tasks.

Recommended citation: A. Micheli, D. Tortorella (2025). "An Empirical Evaluation of Rewiring Approaches in Graph Neural Networks." Pattern Recognition Letters, vol. 196, pp. 134-141.

Read Paper

An Empirical Investigation of Shortcuts in Graph Learning

Published in GbRPR, 2025

We investigate shortcut learning in graphs by experimenting with adding training counterfactuals and shifting inductive bias.

Recommended citation: D. Tortorella, M. Fontanesi, A. Micheli, M. Podda (2025). "An Empirical Investigation of Shortcuts in Graph Learning." Graph-Based Representations in Pattern Recognition. GbRPR 2025. LNCS vol. 15727, pp. 147-156.

Read Paper

Investigating Time-Scales in Deep Echo State Networks for Natural Language Processing

Published in ICANN, 2025

An analysis of time-scales in Deep Bidirectional ESNs applied to sentence-level and token-level NLP tasks.

Recommended citation: C. Baccheschi, A. Bondielli, A. Lenci, A. Micheli, L. Passaro, M. Podda, D. Tortorella (2025). "Investigating Time-Scales in Deep Echo State Networks for Natural Language Processing." Artificial Neural Networks and Machine Learning. ICANN 2025 International Workshops and Special Sessions, LNCS vol. 16072, pp. 188-200.

Read Paper

Bridging XAI and spectral analysis to investigate the inductive biases of deep graph networks

Published in Machine Learning, 2025

We apply graph signal processing to node importances of XAI explanations to refine the characterization of DGN inductive biases.

Recommended citation: M. Fontanesi, A. Micheli, M. Podda, D. Tortorella (2025). "Bridging XAI and spectral analysis to investigate the inductive biases of deep graph networks." Machine Learning, vol. 114, 257.

Read Paper

Efficient quantification on large-scale networks

Published in Machine Learning, 2025

We propose XNQ, an efficient model for network quantification that scales to large networks and is resilient to heterophily.

Recommended citation: A. Micheli, A. Moreo, M. Podda, F. Sebastiani, W. Simoni, D. Tortorella (2025). "Efficient quantification on large-scale networks." Machine Learning, vol. 114, 270.

Read Paper

Randomized Ising models for graph node representation

Published in Neurocomputing, 2026

RIM4G is a new model for graph node representation that is amenable to physical implementation on non-conventional hardware.

Recommended citation: M.G. Berni, A. Brau, A. Micheli, D. Tortorella (2026). "Randomized Ising models for graph node representation." Neurocomputing, vol. 679, 133195.

Read Paper

research

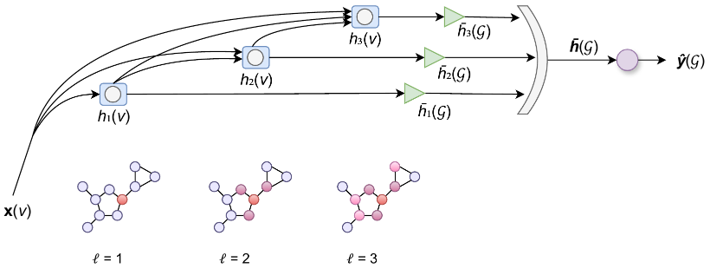

Deep Learning on Graphs


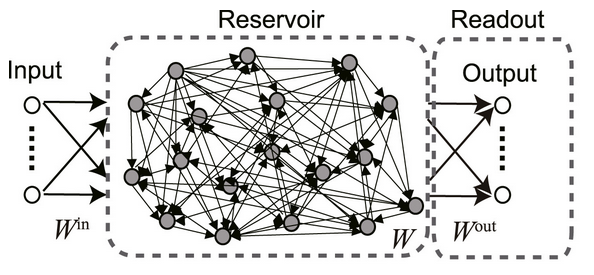

Reservoir Computing


talks

Talk 1 on Relevant Topic in Your Field

Published:

This is a description of your talk, which is a markdown file that can be markdown-ified like any other post. Yay markdown!

Conference Proceeding talk 3 on Relevant Topic in Your Field

Published:

This is a description of your conference proceedings talk; note the different field in type. You can put anything in this field.

teaching

TA for the Machine Learning course (fall 2022)

Master's degree course, University of Pisa, Department of Computer Science, 2022

Supporting the students in matters concerning the course project.

TA for the Machine Learning course (fall 2024)

Master's degree course, University of Pisa, Department of Computer Science, 2024

Supporting the students in matters concerning the course project.

TA for the Machine Learning course (fall 2025)

Master's degree course, University of Pisa, Department of Computer Science, 2025

Supporting the students in matters concerning the course project.

Laboratorio I course (spring 2026)

Bachelor's degree course, University of Pisa, Department of Computer Science, 2025

JavaScript and TypeScript programming languages; imperative, functional, and object-oriented programming; and the main development tools supporting programming, such as debuggers, versioning systems, and testing.